Imagine a household robot tasked with handing you a cup of water. That seemingly simple request actually requires a complex series of decisions: the robot must interpret your words, identify the right object, navigate your space, and complete the task safely. Now imagine the same robot helping an older adult live independently, assisting someone with limited motor skills, or taking on everyday chores to lighten the load for busy families. Unlike one-off commands or tightly defined tasks, these more meaningful roles require robots to understand what people want — not just literally, but in context.

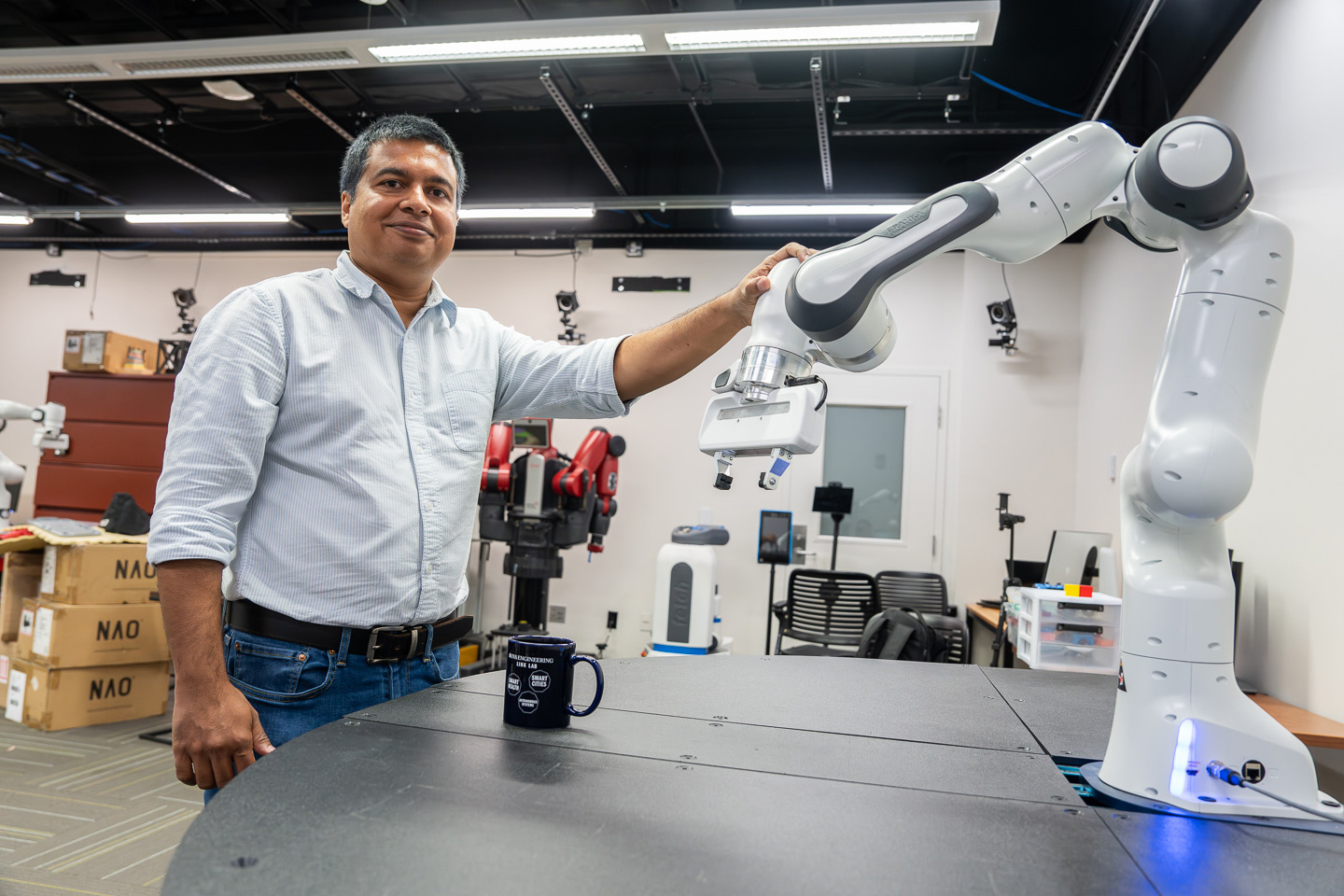

“Suppose you tell the robot, ‘Put this cup onto that table,’ while pointing to the cup and then to the table,” explained Tariq Iqbal, assistant professor of systems and information engineering and computer science at the University of Virginia School of Engineering and Applied Science. “The robot has to combine your verbal message, your pointing gesture, and even your eye gaze to figure out what you mean.” From there, it has to plan and carry out a sequence of actions — locating the cup, picking it up, navigating to the table, and placing it down.

But how does a robot learn to pick up an object and place it on a surface? And how does it choose the right object and the right surface, then set the object down without knocking it over?

Iqbal directs the Collaborative Robotics Lab at UVA Engineering and recently earned a $630,000 National Science Foundation CAREER Award to develop robots that can understand what people want, learn new skills over time, and adapt to changes in both human behavior and the environment. His research team develops adaptive algorithms to guide robot behavior in response to human behavior, with the goal of creating intelligent, long-term partners that remain helpful and reliable as people’s needs and surroundings evolve.

According to Iqbal, the ideal robot wouldn’t just follow instructions — it would be responsive to changes in both the person and the environment. For instance, if the person starts relying more on subtle cues like eye gaze instead of gestures, the robot would need to adjust. And if the environment changes — say the table is moved to a different height or orientation — the robot must still carry out the task smoothly.

“Humans aren’t always consistent in their interactions,” Iqbal said. “A person’s statements or gestures may change from one moment to the next because people rarely repeat themselves in the exact same way. But people expect the same response, even if they give the same command in different ways.”

The prestigious five-year CAREER Award recognizes early-career faculty who demonstrate the potential to serve as academic role models while leading research advances in their field, and it provides funding for their key projects.

The project has three goals, each addressing a challenge in blending multiple types of input and output for human-robot interaction, Iqbal said. First, it will develop smarter algorithms that help robots understand what people want by combining different types of signals, such as verbal messages, hand gestures, eye gaze, and head movements. Second, it will develop new learning and planning methods that help robots align their actions with what people want. Third, the team will design learning techniques that let robots adapt to changes in how humans communicate, supporting long-term interactions.

Iqbal plans to give UVA undergraduates and even high school students the chance to be involved in the research process. Additionally, he will incorporate the new machine learning and planning methods into classroom instruction.

“This award is a huge honor,” Iqbal said. “It lets our lab explore how we can create effective and adaptable long-term interactions between humans and robots, maybe for years. The results of this research could shape how people perceive and accept robots as part of everyday life.”

Iqbal envisions that the research could lead to more intuitive and helpful robotic assistants not just in homes but in settings where collaboration, safety, and adaptability may be even more critical, such as warehouse floors or hospital rooms.

Before joining UVA, Iqbal was a postdoctoral associate at MIT’s Computer Science and Artificial Intelligence Laboratory. He earned his Ph.D. in computer science from the University of California, San Diego.